The Past, Present, and Future of Data Quality Management: Understanding Testing, Monitoring, and Data Observability in 2024

Data quality monitoring. Data testing. Data observability. Say that five times fast.

Are they different words for the same thing? Unique approaches to the same problem? Something else entirely?

And more importantly, do you really need all three?

Like everything in data engineering, data quality management is evolving at lightning speed. The meteoric rise of data and AI in the enterprise has made data quality a zero-day risk for modern businesses, and THE problem to solve for data teams. With so much overlapping terminology, it isn’t always clear how it all fits together, or if it fits together at all.

But contrary to what some might argue, data quality monitoring, data testing, and data observability aren’t contradictory or even alternative approaches to data quality management; they’re complementary elements of a single solution.

In this piece, I’ll dive into the specifics of these three methodologies, where they perform best, where they fall short, and how you can optimize your data quality practice to drive data trust in 2024.

Understanding the modern data quality problem

Before we can understand the current solution, we need to understand the problem, and how it’s changed over time. Let’s consider the following analogy.

Imagine you’re an engineer responsible for a local water supply. When you took the job, the city only had a population of 1,000 residents. But after gold is discovered under the town, your little community of 1,000 transforms into a bona fide city of 1,000,000.

How might that change the way you do your job?

For starters, in a small environment, the fail points are relatively minimal: if a pipe goes down, the root cause can be narrowed to one of a couple of expected culprits (pipes freezing, someone digging into the water line, the usual) and resolved just as quickly with the resources of one or two employees.

With the snaking pipelines of one million new residents to design and maintain, the frenzied pace required to meet demand, and the limited capabilities (and visibility) of your team, you no longer have the same ability to locate and resolve every problem you expect to pop up, much less be on the lookout for the ones you don’t.

The modern data environment is the same. Data teams have struck gold, and the stakeholders want in on the action. The more your data environment grows, the more challenging data quality becomes, and the less effective traditional data quality methods will be.

They aren’t necessarily wrong. But they aren’t enough either.

So, what’s the difference between data monitoring, testing, and observability?

To be very clear, each of these methods attempts to address data quality. So, if that’s the problem you need to build or buy for, any one of these would theoretically check that box. Still, just because these are all data quality solutions doesn’t mean they’ll actually solve your data quality problem.

When and how these solutions should be used is a little more complex than that.

In its simplest terms, you can think of data quality as the problem; testing and monitoring as methods to identify quality issues; and data observability as a different and comprehensive approach that combines and extends both methods with deeper visibility and resolution features to solve data quality at scale.

Or to put it even more simply, monitoring and testing identify problems; data observability identifies problems and makes them actionable.

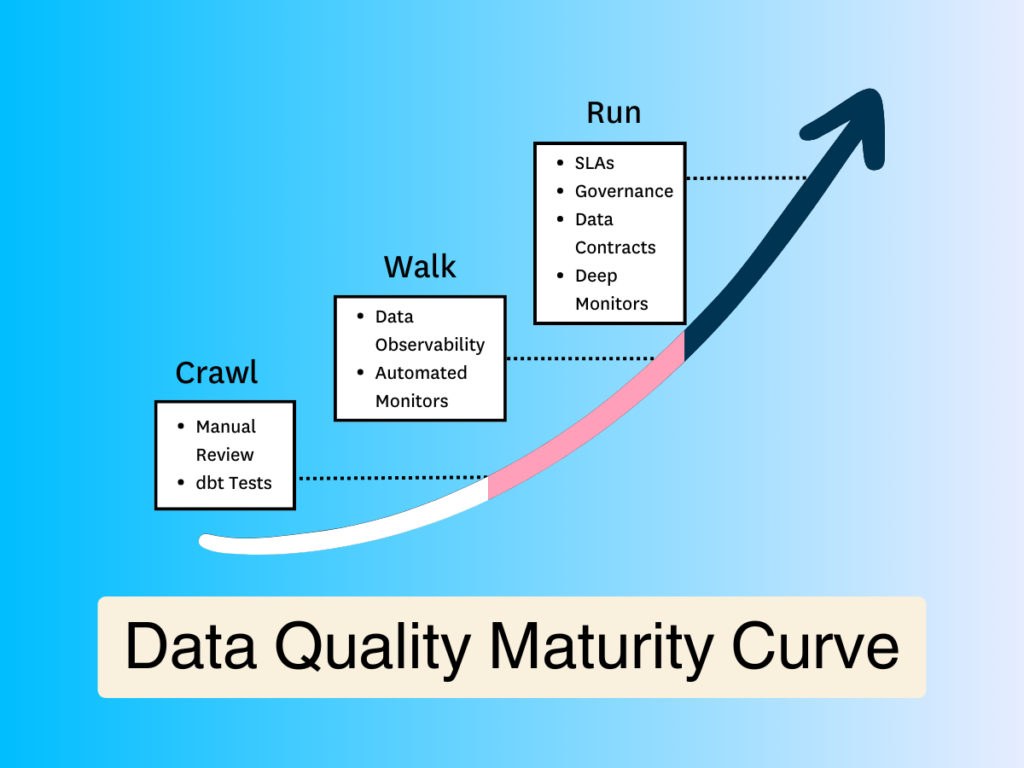

Here’s a quick illustration that might help visualize where data observability fits in the data quality maturity curve.

Now, let’s dive into each method in a bit more detail.

Data testing

The first of two traditional approaches to data quality is the data test. Data quality testing (or simply data testing) is a detection method that employs user-defined constraints or rules to identify specific known issues within a dataset in order to validate data integrity and ensure specific data quality standards.

To create a data test, the data quality owner would write a series of manual scripts (generally in SQL or leveraging a modular solution like dbt) to detect specific issues like excessive null rates or incorrect string patterns.
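To make that concrete, here is a minimal sketch of two such tests: a null-rate check and a string-pattern check. The article mentions SQL or dbt; plain Python is used here only so the example runs on its own. The sample rows, the column names, the `A-1234` order ID format, and the 10% threshold are all assumptions made up for illustration.

```python
import re

# Hypothetical sample rows; in practice these would come from a warehouse query.
rows = [
    {"order_id": "A-1001", "email": "ana@example.com"},
    {"order_id": "A-1002", "email": None},
    {"order_id": "1003", "email": "bo@example.com"},
    {"order_id": "A-1004", "email": "cy@example.com"},
]

ORDER_ID_PATTERN = re.compile(r"A-\d{4}")  # assumed format for this example
MAX_NULL_RATE = 0.10  # assumed threshold for this example


def null_rate(rows, column):
    """Return the fraction of rows where the column is missing (None)."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if r[column] is None) / len(rows)


def pattern_violations(rows, column, pattern):
    """Return the non-null values in the column that do not fully match the pattern."""
    return [
        r[column]
        for r in rows
        if r[column] is not None and not pattern.fullmatch(r[column])
    ]


def run_tests(rows):
    """Run both user-defined checks and collect a message for each failure."""
    failures = []
    rate = null_rate(rows, "email")
    if rate > MAX_NULL_RATE:
        failures.append(f"email null rate {rate:.0%} exceeds {MAX_NULL_RATE:.0%}")
    bad_ids = pattern_violations(rows, "order_id", ORDER_ID_PATTERN)
    if bad_ids:
        failures.append(f"order_id values with unexpected format: {bad_ids}")
    return failures


failures = run_tests(rows)
for message in failures:
    print("FAILED:", message)
```

Note that both checks only catch issues someone thought to encode in advance: a rule exists for null emails and malformed order IDs, and nothing else.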

When your data needs, and consequently your data quality needs, are very small, many teams will be able to get what they need out of simple data testing. However, as your data grows in size and complexity, you’ll quickly find yourself facing new data quality issues, and needing new capabilities to solve them. And that time will come much sooner than later.

While data testing will continue to be a necessary component of a data quality framework, it falls short in a few key areas:

- Requires intimate data knowledge: data testing requires data engineers to have 1) enough specialized domain knowledge to define quality, and 2) enough knowledge of how the data might break to set up tests to validate it.

- No coverage for unknown issues: data testing can only tell you about the issues you expect to find, not the incidents you don’t. If a test isn’t written to cover a specific issue, testing won’t find it.

- Not scalable: writing 10 tests for 30 tables is quite a bit different from writing 100 tests for 3,000.

- Limited visibility: data testing only tests the data itself, so it can’t tell you if the issue is really a problem with the data, the system, or the code that’s powering it.

- No resolution: even if data testing detects an issue, it won’t get you any closer to resolving it, or to understanding what and who it impacts.

At any level of scale, testing becomes the data equivalent of yelling “fire!” in a crowded street and then walking away without telling anyone where you saw it.

Data quality monitoring

Another traditional (if somewhat more sophisticated) approach to data quality, data quality monitoring is an ongoing solution that continually monitors and identifies unknown anomalies lurking in your data through either manual threshold setting or machine learning.

For example, is your data coming in on time? Did you get the number of rows you were expecting?

The primary benefit of data quality monitoring is that it provides broader coverage for unknown unknowns, and frees data engineers from writing or cloning tests for each dataset to manually identify common issues.

In a sense, you could consider data quality monitoring more holistic than testing because it compares metrics over time and enables teams to uncover patterns they wouldn’t see from a single unit test of the data for a known issue.
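As a rough illustration of comparing metrics over time, the sketch below implements the two example questions above: a freshness check and a row-count (volume) check. The volume check uses a simple z-score against recent history, which is one common statistical approach and not necessarily what any given monitoring tool does. The function names, the six-hour delay, the threshold of 3, and the sample counts are all assumptions.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it sits more than z_threshold sample
    standard deviations away from the mean of the historical counts."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


def is_stale(last_loaded_at, now, max_delay=timedelta(hours=6)):
    """Flag a table whose most recent load is older than max_delay."""
    return now - last_loaded_at > max_delay


# Hypothetical daily row counts for the past week.
daily_row_counts = [10_120, 9_980, 10_050, 10_200, 9_900, 10_075, 10_010]

print(volume_anomaly(daily_row_counts, 4_200))   # a sharp drop in volume
print(volume_anomaly(daily_row_counts, 10_100))  # a typical day
print(is_stale(datetime(2024, 1, 15, 3, 0), datetime(2024, 1, 15, 12, 0)))
```

Unlike a fixed test, nobody had to say in advance what a "bad" row count looks like; the expectation comes from the history itself.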

Unfortunately, data quality monitoring also falls short in a few key areas.

- Increased compute cost: data quality monitoring is expensive. Like data testing, data quality monitoring queries the data directly, but because it’s intended to identify unknown unknowns, it needs to be applied broadly to be effective. That means big compute costs.

- Slow time-to-value: monitoring thresholds can be automated with machine learning, but you’ll still need to build each monitor yourself first. That means you’ll be doing a lot of coding for each issue on the front end and then manually scaling those monitors as your data environment grows over time.

- Limited visibility: data can break for all kinds of reasons. Just like testing, monitoring only looks at the data itself, so it can only tell you that an anomaly occurred, not why it happened.

- No resolution: while monitoring can certainly detect more anomalies than testing, it still can’t tell you what was impacted, who needs to know about it, or whether any of that matters in the first place.

What’s more, because data quality monitoring is only more effective at delivering alerts, not managing them, your data team is far more likely to experience alert fatigue at scale than they are to actually improve the data’s reliability over time.

Data observability

That leaves data observability. Unlike the methods mentioned above, data observability refers to a comprehensive vendor-neutral solution that’s designed to provide complete data quality coverage that’s both scalable and actionable.

Inspired by software engineering best practices, data observability is an end-to-end AI-enabled approach to data quality management that’s designed to answer the what, who, why, and how of data quality issues within a single platform. It compensates for the limitations of traditional data quality methods by combining both testing and fully automated data quality monitoring into a single system and then extends that coverage into the data, system, and code levels of your data environment.

Combined with critical incident management and resolution features (like automated column-level lineage and alerting protocols), data observability helps data teams detect, triage, and resolve data quality issues from ingestion to consumption.

What’s more, data observability is designed to provide value cross-functionally by fostering collaboration across teams, including data engineers, analysts, data owners, and stakeholders.

Data observability resolves the shortcomings of traditional DQ practice in four key ways:

- Robust incident triaging and resolution: most importantly, data observability provides the resources to resolve incidents faster. In addition to tagging and alerting, data observability expedites the root-cause process with automated column-level lineage that lets teams see at a glance what’s been impacted, who needs to know, and where to go to fix it.

- Complete visibility: data observability extends coverage beyond the data sources into the infrastructure, pipelines, and post-ingestion systems in which your data moves and transforms to resolve data issues for domain teams across the company.

- Faster time-to-value: data observability fully automates the set-up process with ML-based monitors that provide instant coverage right out of the box without coding or threshold setting, so you can get coverage faster that auto-scales with your environment over time (along with custom insights and simplified coding tools to make user-defined testing easier too).

- Data product health monitoring: data observability also extends monitoring and health tracking beyond the traditional table format to monitor, measure, and visualize the health of specific data products or critical assets.
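The lineage-driven triage described in the first item of this list can be pictured as a graph traversal. The sketch below is a simplified illustration, not how any particular observability platform implements it: lineage is a hand-written dictionary from each column to the columns derived from it, and a breadth-first walk answers "what's been impacted" and "who needs to know". Every column name, owner name, and function name here is hypothetical.

```python
from collections import deque

# Hypothetical column-level lineage: each column maps to the columns built from it.
LINEAGE = {
    "raw.orders.amount": ["staging.orders.amount_usd"],
    "staging.orders.amount_usd": [
        "marts.revenue.daily_total",
        "marts.customers.lifetime_value",
    ],
    "marts.revenue.daily_total": ["dashboard.finance.revenue_chart"],
    "marts.customers.lifetime_value": [],
    "dashboard.finance.revenue_chart": [],
}

# Hypothetical ownership metadata for downstream assets.
OWNERS = {
    "staging.orders.amount_usd": "data-eng",
    "marts.revenue.daily_total": "finance",
    "marts.customers.lifetime_value": "analytics",
    "dashboard.finance.revenue_chart": "finance",
}


def downstream(column, lineage):
    """Breadth-first walk returning every column derived, directly or
    indirectly, from the given column, in the order it is discovered."""
    seen = set()
    impacted = []
    queue = deque([column])
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            if child not in seen:
                seen.add(child)
                impacted.append(child)
                queue.append(child)
    return impacted


def owners_to_notify(impacted, owners):
    """Return the distinct owners of the impacted columns, sorted by name."""
    return sorted({owners[c] for c in impacted if c in owners})


impacted = downstream("raw.orders.amount", LINEAGE)
print(impacted)
print(owners_to_notify(impacted, OWNERS))
```

In a real platform the lineage graph would be derived automatically from query logs or pipeline metadata; the traversal itself stays this simple.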

Data observability and AI

We’ve all heard the phrase “garbage in, garbage out.” Well, that maxim is doubly true for AI applications. However, AI doesn’t simply need better data quality management to inform its outputs; your data quality management should also be powered by AI itself in order to maximize scalability for evolving data estates.

Data observability is the de facto (and arguably only) data quality management solution that enables enterprise data teams to effectively deliver reliable data for AI. And part of the way it achieves that feat is by also being an AI-enabled solution.

By leveraging AI for monitor creation, anomaly detection, and root-cause analysis, data observability enables hyper-scalable data quality management for real-time data streaming, RAG architectures, and other AI use cases.

So, what’s next for data quality in 2024?

As the data estate continues to evolve for the enterprise and beyond, traditional data quality methods can’t monitor all the ways your data platform can break, or help you resolve those breaks when they happen.

Particularly in the age of AI, data quality isn’t merely a business risk but an existential one as well. If you can’t trust the entirety of the data being fed into your models, you can’t trust the AI’s output either. At the dizzying scale of AI, traditional data quality methods simply aren’t enough to protect the value or the reliability of those data assets.

To be effective, both testing and monitoring need to be integrated into a single platform-agnostic solution that can objectively monitor the entire data environment (data, systems, and code) end-to-end, and then arm data teams with the resources to triage and resolve issues faster.

In other words, to make data quality management useful, modern data teams need data observability.

First step. Detect. Second step. Resolve. Third step. Prosper.

This story was originally published here.

The post The Past, Present, and Future of Data Quality Management: Understanding Testing, Monitoring, and Data Observability in 2024 appeared first on Datafloq.