Mastering the Basics: An Essential Guide to Reinforcement Learning

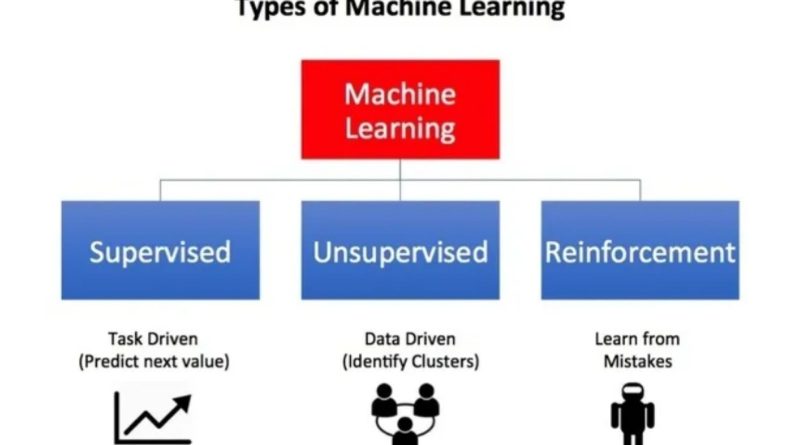

Welcome to this informative piece! If you've found yourself here, you are likely well aware of the rising significance of machine learning. The relevance of this field has surged impressively in recent years, fueled by growing demand across business sectors and the rapid progress of technology. Machine learning is an extensive landscape with a plethora of algorithms that predominantly fall into three main categories:

- Supervised Learning: These algorithms aim to model the relationship between features (independent variables) and a target label, based on a set of observations. The resulting model is then used to predict the label of new observations from their features.

- Unsupervised Learning: These algorithms try to uncover hidden patterns or intrinsic structures in unlabeled data.

- Reinforcement Learning: Operating on the principle of action and reward, these algorithms enable an agent to learn how to achieve a goal by iteratively determining the reward associated with its actions.

In this article, our focus will be on providing you with an overview of commonly used reinforcement learning algorithms. Reinforcement Learning (RL) is undoubtedly one of the most thriving research domains in contemporary Artificial Intelligence, and its popularity shows no signs of diminishing. To equip you with a strong foundation in RL, let's dive into five crucial elements you need to grasp as you embark on this exciting journey.

So, without further ado, let's delve in.

Understanding Reinforcement Learning: How does it differ from other ML techniques?

Reinforcement Learning (RL) is a subset of machine learning that enables an agent to learn in an interactive environment through trial and error, using feedback from its own actions and experiences.

While supervised learning and RL both involve a mapping between input and output, they diverge in the feedback provided to the agent. In supervised learning, the feedback is the correct set of actions for performing a task. RL, by contrast, uses rewards and punishments as signals for positive and negative behavior.

Compared to unsupervised learning, RL differs primarily in its goals. The goal of unsupervised learning is to uncover similarities and differences among data points. In contrast, the goal in RL is to find a suitable action model that maximizes the agent's total cumulative reward. A typical RL model forms an action-reward feedback loop: the agent takes an action, and the environment responds with a new state and a reward.

Formulating a Basic Reinforcement Learning Problem: Key Concepts and Steps

A fundamental understanding of Reinforcement Learning (RL) involves grasping some key terms that describe the main elements of an RL problem:

- Environment: The physical world in which the agent operates.

- State: The agent's current situation.

- Reward: Feedback the agent receives from the environment.

- Policy: The strategy that maps the agent's state to its actions.

- Value: The future reward an agent would receive by taking an action in a particular state.

An engaging way to illustrate RL problems is through games. Let's take the example of PacMan.

Here, the agent (PacMan) aims to eat food in the grid while avoiding ghosts. In this scenario, the grid world is the interactive environment in which the agent acts. The agent gains a reward for eating food and receives a penalty if it gets killed by a ghost (which means losing the game). The states in this case are the locations of the agent within the grid world, and winning the game corresponds to maximizing the total cumulative reward.
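
To make the loop between agent and environment concrete, here is a minimal sketch. It does not implement PacMan: the `GridWorld` class, its one-dimensional corridor, and the reward values (+10 for food, -10 for the ghost, -1 per move) are assumptions chosen purely for illustration.

```python
class GridWorld:
    """Hypothetical 1-D corridor: food at the right end, a ghost at the left end."""

    def __init__(self, size=5, start=2):
        self.size = size
        self.start = start
        self.state = start

    def reset(self):
        """Put the agent back on its start cell and return that state."""
        self.state = self.start
        return self.state

    def step(self, action):
        """Apply an action (-1 = left, +1 = right); return (next_state, reward, done)."""
        self.state = max(0, min(self.size - 1, self.state + action))
        if self.state == self.size - 1:   # reached the food
            return self.state, 10.0, True
        if self.state == 0:               # caught by the ghost
            return self.state, -10.0, True
        return self.state, -1.0, False    # small cost for every other move


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total (undiscounted) cumulative reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the policy maps state -> action
        state, reward, done = env.step(action)  # the environment gives feedback
        total_reward += reward
        if done:
            break
    return total_reward


def always_right(state):
    """A fixed, hand-written policy: always move toward the food."""
    return +1


print(run_episode(GridWorld(), always_right))  # prints 9.0
```

Starting from the middle cell, the agent pays -1 for its first move and collects +10 on the second, which gives the cumulative reward of 9.0.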

When building an optimal policy, the agent faces a dilemma between exploring new states and maximizing its overall reward. This is known as the Exploration vs Exploitation trade-off. To strike a balance, the agent may need to make short-term sacrifices so that it can collect enough information to make the best overall decision in the future.
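
One common way to handle this trade-off is an epsilon-greedy rule, sketched below. The article does not prescribe a particular exploration strategy, so treat this as one illustrative option; the function name and its parameters are assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index: a random one with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])  # exploit


# With epsilon = 0 the agent never explores and always picks the best-known action.
best = epsilon_greedy([0.1, 0.5, 0.2], epsilon=0.0)  # index 1
```

A larger `epsilon` means more exploration. A typical design choice is to start with a high value and reduce it as the agent's value estimates become more reliable.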

Markov Decision Processes (MDPs) provide a mathematical framework for describing an environment in RL, and almost all RL problems can be formulated as MDPs. An MDP consists of a finite set of environment states (S), a set of possible actions (A(s)) in each state, a real-valued reward function (R(s)), and a transition model (P(s' | s, a)). In real-world settings, however, the agent often has no prior knowledge of the environment's dynamics. In such cases, model-free RL methods prove useful.
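
As a rough illustration, the four components of an MDP can be written down as plain data. The `MDP` class and the two-state "hungry/fed" example below are hypothetical and only meant to show how S, A(s), R(s), and P(s' | s, a) fit together.

```python
import random
from dataclasses import dataclass


@dataclass
class MDP:
    states: list        # S
    actions: dict       # A(s): state -> list of available actions
    rewards: dict       # R(s): state -> real-valued reward
    transitions: dict   # P(s' | s, a): (state, action) -> {next_state: probability}

    def sample_next_state(self, state, action, rng=random):
        """Draw a next state s' from P(. | s, a)."""
        dist = self.transitions[(state, action)]
        next_states = list(dist)
        weights = [dist[s] for s in next_states]
        return rng.choices(next_states, weights=weights, k=1)[0]


# Hypothetical two-state example.
toy = MDP(
    states=["hungry", "fed"],
    actions={"hungry": ["eat", "wait"], "fed": []},
    rewards={"hungry": -1.0, "fed": 10.0},
    transitions={
        ("hungry", "eat"): {"fed": 0.9, "hungry": 0.1},
        ("hungry", "wait"): {"hungry": 1.0},
    },
)

# Every transition distribution must sum to 1.
for dist in toy.transitions.values():
    assert abs(sum(dist.values()) - 1.0) < 1e-9
```

A model-free method never gets to read `transitions` directly. It only observes the states and rewards that result from the actions it takes.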

One commonly used model-free approach is Q-learning, which can be employed to create a self-playing PacMan agent. Q-learning revolves around updating Q-values, which represent the value of performing action 'a' in state 's'. The core of the algorithm is its value update rule, which moves Q(s, a) toward the observed reward plus the discounted value of the best action available in the next state.
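
The standard tabular Q-learning update can be sketched as a single function. The learning rate `alpha`, the discount factor `gamma`, and the dictionary-based Q-table are illustrative assumptions rather than values taken from this article.

```python
from collections import defaultdict


def q_learning_update(Q, state, action, reward, next_state, next_actions,
                      alpha=0.1, gamma=0.9):
    """Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
    # Value of the best action in the next state (0.0 if the state is terminal).
    best_next = max((Q[(next_state, a)] for a in next_actions), default=0.0)
    td_target = reward + gamma * best_next
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])
    return Q[(state, action)]


Q = defaultdict(float)       # unseen (state, action) pairs start at 0.0
Q[("s1", "right")] = 2.0     # pretend we already learned something about s1
new_value = q_learning_update(Q, "s0", "right", reward=1.0,
                              next_state="s1", next_actions=["left", "right"])
```

Here the target is 1.0 + 0.9 * 2.0 = 2.8, so the entry for ("s0", "right") moves one tenth of the way from 0 toward 2.8, ending up at roughly 0.28.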

Popular Reinforcement Learning Algorithms: An Overview

Two model-free RL algorithms commonly used in the field are Q-learning and SARSA (State-Action-Reward-State-Action). These algorithms differ primarily in their exploration strategies, while their exploitation strategies remain fairly similar. Q-learning is an off-policy method: the agent learns values based on the optimal action 'a*' in the next state, regardless of the action its behavior policy actually takes. SARSA, on the other hand, is an on-policy method that learns values based on the action 'a' actually chosen by its current policy. While these methods are simple to implement, they lack generality, as they cannot estimate values for unobserved states.
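
The difference between the two methods shows up in the target each one bootstraps from. The sketch below compares just those targets. The function names, the dictionary Q-table, and the discount factor are assumptions for illustration.

```python
def q_learning_target(Q, reward, next_state, next_actions, gamma=0.9):
    """Off-policy: bootstrap from the greedy action a* in the next state."""
    return reward + gamma * max(Q.get((next_state, a), 0.0) for a in next_actions)


def sarsa_target(Q, reward, next_state, next_action, gamma=0.9):
    """On-policy: bootstrap from the action the current policy actually took."""
    return reward + gamma * Q.get((next_state, next_action), 0.0)


Q = {("s1", "left"): 0.0, ("s1", "right"): 2.0}

# Suppose the agent was exploring and picked "left" in s1.
off_policy = q_learning_target(Q, 1.0, "s1", ["left", "right"])  # uses "right": about 2.8
on_policy = sarsa_target(Q, 1.0, "s1", "left")                   # uses "left": 1.0
```

When the agent happens to take the greedy action, both targets coincide. They only differ on exploratory steps like the one above.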

This limitation is addressed by more advanced algorithms like Deep Q-Networks (DQNs). DQNs use neural networks to estimate Q-values, which makes value estimates possible for unseen states. However, DQNs can only handle discrete, low-dimensional action spaces.
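
To see why a network can produce estimates for states it has never visited, consider the forward pass alone. The sketch below is not a DQN: it uses a tiny, untrained, one-hidden-layer network in pure Python with random weights, and it leaves out everything that makes DQN training work (such as experience replay and a target network). All names and sizes are assumptions.

```python
import math
import random


def make_q_network(state_dim, hidden_dim, n_actions, seed=0):
    """Return random weights for a one-hidden-layer network (untrained)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(state_dim)] for _ in range(hidden_dim)]
    w2 = [[rng.uniform(-1, 1) for _ in range(hidden_dim)] for _ in range(n_actions)]
    return w1, w2


def q_values(network, state):
    """Forward pass: state vector -> one Q-value estimate per discrete action."""
    w1, w2 = network
    hidden = [math.tanh(sum(w * x for w, x in zip(row, state))) for row in w1]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w2]


net = make_q_network(state_dim=2, hidden_dim=4, n_actions=3)
qs = q_values(net, [0.25, -0.7])   # works for any state vector, visited or not
greedy_action = max(range(len(qs)), key=lambda a: qs[a])
```

Unlike a lookup table, the function returns a value for every input. The output also has one entry per action, which is why this design is tied to a discrete, reasonably small action set.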

To tackle high-dimensional, continuous action spaces, Deep Deterministic Policy Gradient (DDPG) was developed. DDPG is a model-free, off-policy, actor-critic algorithm that learns policies effectively in such complex scenarios. It builds on the actor-critic architecture, in which an actor proposes actions and a critic evaluates them.
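
The division of labor between actor and critic can be sketched with two small functions. This shows only the interfaces, not DDPG itself: real implementations use neural networks for both parts and train them together, whereas the linear forms and parameter values below are hypothetical stand-ins.

```python
import math


def actor(theta, state):
    """Deterministic policy: state vector -> one continuous action in [-1, 1]."""
    return math.tanh(sum(t * x for t, x in zip(theta, state)))


def critic(w, state, action):
    """Q(s, a) estimate: a linear score over the state features plus the action."""
    features = list(state) + [action]
    return sum(wi * fi for wi, fi in zip(w, features))


theta = [0.5, -0.2]      # hypothetical actor parameters
w = [0.1, 0.3, 1.0]      # hypothetical critic parameters
state = [1.0, 2.0]

action = actor(theta, state)       # a real number, not an index into a list
score = critic(w, state, action)   # how good the critic thinks that action is
```

Because the actor outputs the action directly, there is no need to take a maximum over all possible actions, which is what makes continuous action spaces tractable.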

The Practical Applications of Reinforcement Learning: A Wide Spectrum

Because Reinforcement Learning (RL) relies heavily on large amounts of data, it is most effective in domains where simulated data is readily available, such as gameplay and robotics.

One of the most prominent uses of RL is in developing artificial intelligence for computer games. AlphaGo stands as a shining example, being the first computer program to defeat a world champion in the ancient Chinese game of Go, and its successor AlphaGo Zero learned the game purely through self-play. Other examples include AI for Atari games, Backgammon, and more.

In robotics and industrial automation, RL is used to equip robots with an efficient, adaptive control system that learns from their own experience and behavior. A noteworthy example is DeepMind's research on Deep Reinforcement Learning for Robotic Manipulation with Asynchronous Off-Policy Updates. A demonstration video of this work is also available.

Beyond games and robotics, RL has found applications in numerous other areas. It powers abstractive text summarization engines and dialog agents (text, speech) that learn from user interactions and improve over time. In healthcare, RL helps discover optimal treatment policies. The finance sector also leverages RL, deploying RL-based agents for online stock trading. These wide-ranging applications underscore the potential and versatility of RL in practical scenarios.

Reinforcement Learning – A Vital Building Block in AI’s Future

As we reach the end of this essential guide to Reinforcement Learning (RL), we hope you've gained useful insights into the fascinating world of RL and its broad-ranging applications. From gaming to healthcare, RL is proving to be a transformative force in multiple industries.

At its heart, RL is about learning from experience. It encapsulates the timeless principle of trial and error, demonstrating how learning from our actions and their consequences can lead to optimized outcomes. It's this very essence that allows RL algorithms to interact dynamically with their environment and learn how to maximize their reward.

The RL journey involves learning the fundamentals of the RL problem, understanding how to formulate it, and then moving on to explore algorithms such as Q-learning, SARSA, DQNs, and DDPG. Each of these algorithms brings unique elements to the table, making them suitable for different situations and requirements.

While RL is currently being applied in various domains, this is just the tip of the iceberg. Its potential is immense, and the future of AI will undoubtedly see RL play a greater role in shaping our world. As AI continues to evolve, mastering the fundamentals of RL will equip you with a crucial skillset to navigate and contribute to this rapidly advancing field.

In conclusion, Reinforcement Learning is not merely another machine learning technique, but rather a key that opens up new realms of possibility in artificial intelligence. By continually improving its strategies based on feedback, RL serves as a driving force in AI's quest toward mimicking, and perhaps even surpassing, human learning efficiency. As we forge ahead into the future, the importance of understanding and applying RL concepts will only grow. So, keep exploring, keep learning, and remember: the future belongs to those who learn.

The post Mastering the Basics: An Essential Guide to Reinforcement Learning appeared first on Datafloq.