5 Hard Truths About Generative AI for Technology Leaders

GenAI is everywhere you look, and organizations across industries are putting pressure on their teams to join the race: 77% of business leaders fear they’re already missing out on the benefits of GenAI.

Data teams are scrambling to answer the call. But building a generative AI model that actually drives business value is hard.

And in the long run, a quick integration with the OpenAI API won’t cut it. It’s GenAI, but where’s the moat? Why should users pick you over ChatGPT?

That quick check of the box feels like a step forward, but if you aren’t already thinking about how to connect LLMs with your proprietary data and business context to actually drive differentiated value, you’re behind.

That’s not hyperbole. I’ve talked with half a dozen data leaders just this week on this topic alone. It wasn’t lost on any of them that this is a race. At the finish line there are going to be winners and losers. The Blockbusters and the Netflixes.

If you feel like the starter’s gun has gone off, but your team is still on the starting line stretching and chatting about “bubbles” and “hype,” I’ve rounded up 5 hard truths to help shake off the complacency.

Hard truth #1: Your generative AI features are not well adopted and you’re slow to monetize.

“Barr, if GenAI is so important, why are the current features we’ve implemented so poorly adopted?”

Well, there are a few reasons. One, your AI initiative wasn’t built as a response to an influx of well-defined user problems. For most data teams, that’s because you’re racing and it’s early and you want to gain some experience. However, it won’t be long before your users have a problem that’s best solved by GenAI, and when that happens, you will have much better adoption compared to your tiger team brainstorming ways to tie GenAI to a use case.

And because it’s early, the generative AI features that have been integrated are just “ChatGPT but over here.”

Let me give you an example. Think about a productivity application you might use every day to share organizational knowledge. An app like this might offer a feature to execute commands like “Summarize this,” “Make longer” or “Change tone” on blocks of unstructured text. One command equals one AI credit.

Yes, that’s helpful, but it’s not differentiated.

Maybe the team decides to buy some AI credits, or maybe they just simply click over on the other tab and ask ChatGPT. I don’t want to completely overlook or discount the benefit of not exposing proprietary data to ChatGPT, but it’s also a smaller solution and vision than what’s being painted on earnings calls across the country.

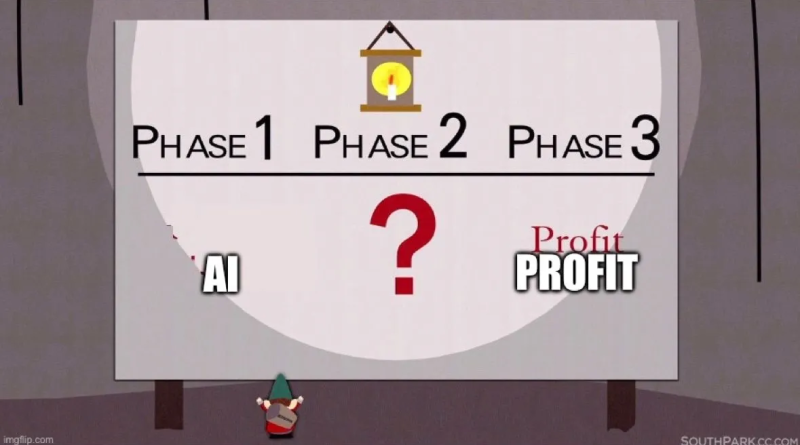

That pesky middle step from concept to value. Image courtesy of Joe Reis on Substack.

So consider: What’s your GenAI differentiator and value add? Let me give you a hint: high-quality proprietary data.

That’s why a RAG model (or sometimes, a fine-tuned model) is so important for GenAI initiatives. It gives the LLM access to that enterprise proprietary data. (I’ll explain why below.)

Hard truth #2: You’re scared to do more with GenAI.

It’s true: generative AI is intimidating.

Sure, you could integrate your AI model more deeply into your organization’s processes, but that feels risky. Let’s face it: ChatGPT hallucinates and it can’t be predicted. There’s a knowledge cutoff that leaves users susceptible to out-of-date output. There are legal repercussions to data mishandlings and providing consumers misinformation, even if accidental.

Sounds real enough, right? Llama 2 sure thinks so. Image courtesy of Pinecone.

Your data mishaps have consequences. And that’s why it’s essential to know exactly what you are feeding GenAI and that the data is accurate.

In an anonymous survey we sent to data leaders asking how far away their team is from enabling a GenAI use case, one response was, “I don’t think our infrastructure is the thing holding us back. We’re treading quite cautiously here. With the landscape moving so fast, and the risk of reputational damage from a ‘rogue’ chatbot, we’re holding fire and waiting for the hype to die down a bit!”

This is a widely shared sentiment across many data leaders I speak to. If the data team has suddenly surfaced customer-facing, secure data, then they’re on the hook. Data governance is a massive consideration and it’s a high bar to clear.

These are real risks that need solutions, but you won’t solve them by sitting on the sideline. There is also a real risk of watching your business being fundamentally disrupted by the team that figured it out first.

Grounding LLMs in your proprietary data with fine tuning and RAG is a big piece to this puzzle, but it’s not easy…

Hard truth #3: RAG is hard.

I believe that RAG (retrieval augmented generation) and fine tuning are the centerpieces of the future of enterprise generative AI. But although RAG is the simpler approach in most cases, developing RAG apps can still be complex.

Can’t we all just start RAGing? What’s the big deal? Image courtesy of Reddit.

RAG might seem like the obvious solution for customizing your LLM. But RAG development comes with a learning curve, even for your most talented data engineers. They need to know prompt engineering, vector databases and embedding vectors, data modeling, data orchestration, and data pipelines, all for RAG. And, because it’s new (introduced by Meta AI in 2020), many companies just don’t yet have enough experience with it to establish best practices.


RAG application architecture. Image courtesy of Databricks.

Here’s an oversimplification of RAG application architecture:

- RAG architecture combines information retrieval with a text generator model, so it has access to your database while trying to answer a question from the user.

- The database has to be a trusted source that includes proprietary data, and it allows the model to incorporate up-to-date and reliable information into its responses and reasoning.

- In the background, a data pipeline ingests various structured and unstructured sources into the database to keep it accurate and up-to-date.

- The RAG chain takes the user query (text) and retrieves relevant data from the database, then passes that data and the query to the LLM in order to generate a highly accurate and personalized response.
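
To make the flow above concrete, here is a minimal Python sketch of a RAG chain. It is an illustration under loud assumptions, not a production design: the `DOCUMENTS` list stands in for the trusted database, `embed` is a toy word-count "embedding" where a real system would use a learned embedding model and a vector database, and `call_llm` is a hypothetical placeholder for whichever model client you use. All names are invented for this example.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for the trusted database of proprietary documents.
DOCUMENTS = [
    "Acme Corp renewed its enterprise contract in March and added 40 seats.",
    "Support tickets from Acme Corp mostly concern single sign-on setup.",
    "Globex is on the starter plan and has not logged in for 60 days.",
]


def embed(text):
    """Toy embedding (assumption): lowercase word counts instead of a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query, context):
    """Combine the retrieved context and the user query into one prompt."""
    context_lines = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context_lines}\n\n"
        f"Question: {query}"
    )


def call_llm(prompt):
    """Hypothetical placeholder: swap in your model provider's client here."""
    return f"[LLM response to a {len(prompt)}-character prompt]"


def rag_answer(query, documents=DOCUMENTS, k=2):
    """Retrieve relevant data, then pass that data and the query to the LLM."""
    context = retrieve(query, documents, k)
    return call_llm(build_prompt(query, context))
```

Calling `rag_answer("What did Acme Corp ask support about?")` retrieves the two Acme documents and leaves the unrelated Globex record out of the prompt. Even in this toy version the answer can only be as good as what sits in `DOCUMENTS`, which is why the ingestion pipeline behind the database matters so much.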

There are a lot of complexities in this architecture, but it does have important benefits:

- It grounds your LLM in accurate proprietary data, thus making it so much more valuable.

- It brings your models to your data rather than bringing your data to your models, which is a relatively simple, cost-effective approach.

We can see this becoming a reality in the Modern Data Stack. The biggest players are working at a breakneck speed to make RAG easier by serving LLMs within their environments, where enterprise data is stored. Snowflake Cortex now enables organizations to quickly analyze data and build AI apps directly in Snowflake. Databricks’ new Foundation Model APIs provide instant access to LLMs directly within Databricks. Microsoft released Microsoft Azure OpenAI Service and Amazon recently launched the Amazon Redshift Query Editor.

Snowflake data cloud. Image courtesy of Medium.

I believe all of these features have a good chance of driving high adoption. But, they also heighten the focus on data quality in these data stores. If the data feeding your RAG pipeline is anomalous, outdated, or otherwise untrustworthy, what’s the future of your generative AI initiative?

Hard truth #4: Your data isn’t ready yet anyway.

Take a good, hard look at your data infrastructure. Chances are if you had a perfect RAG pipeline, fine-tuned model, and clear use case ready to go tomorrow (and wouldn’t that be nice?), you still wouldn’t have clean, well-modeled datasets to plug it all into.

Let’s say you want your chatbot to interface with a customer. To do anything useful, it needs to know about that organization’s relationship with the customer. If you’re an enterprise organization today, that relationship is likely defined across 150 data sources and 5 siloed databases…3 of which are still on-prem.

If that describes your organization, it’s possible you are a year (or two!) away from your data infrastructure being GenAI ready.

Which means if you want the option to do something with GenAI someday soon, you need to be creating useful, highly reliable, consolidated, well-documented datasets in a modern data platform… yesterday. Or the coach is going to call you into the game and your pants are going to be down.

Your data engineering team is the backbone for ensuring data health. And, a modern data stack enables the data engineering team to continuously monitor data quality into the future.

It’s 2024 now. Launching a website, application, or any data product without data observability is a risk. Your data is a product, and it requires data observability and data governance to pinpoint data discrepancies before they move through a RAG pipeline.
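
As a rough illustration of catching discrepancies before they reach a RAG pipeline, the Python sketch below gates records on two simple checks: required fields are present, and the record is fresh. It is a hand-rolled example, not how any particular data observability tool works. The field names (`customer_id`, `text`, `updated_at`) and the 30-day freshness threshold are assumptions made up for the example.

```python
from datetime import datetime, timedelta, timezone


def check_record(record, now, max_age_days=30,
                 required=("customer_id", "text", "updated_at")):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in required:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Freshness: records older than the threshold are flagged as stale.
    updated_at = record.get("updated_at")
    if updated_at is not None and now - updated_at > timedelta(days=max_age_days):
        problems.append("stale")
    return problems


def split_by_quality(records, now=None, **kwargs):
    """Separate records that are safe to index from those to quarantine."""
    now = now or datetime.now(timezone.utc)
    passed, quarantined = [], []
    for record in records:
        problems = check_record(record, now, **kwargs)
        if problems:
            quarantined.append((record, problems))
        else:
            passed.append(record)
    return passed, quarantined
```

Only the `passed` records would be handed to the indexing step. The quarantined ones carry their list of problems, so the data engineering team can see why each record was held back instead of discovering it through a wrong chatbot answer.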

Hard truth #5: You’ve sidelined critical GenAI players without knowing it.

Generative AI is a team sport, especially when it comes to development. Many data teams make the mistake of excluding key players from their GenAI tiger teams, and that’s costing them in the long run.

Who should be on an AI tiger team? Leadership, or a primary business stakeholder, to spearhead the initiative and remind the group of the business value. Software engineers to develop the code, the user-facing application and the API calls. Data scientists to consider new use cases, fine tune your models, and push the team in new directions. Who’s missing here?

Data engineers.

Data engineers are critical to GenAI initiatives. They’re going to be able to understand the proprietary business data that provides the competitive advantage over a ChatGPT, and they’re going to build the pipelines that make that data available to the LLM via RAG.

If your data engineers aren’t in the room, your tiger team is not at full strength. The most pioneering companies in GenAI are telling me they are already embedding data engineers in all development squads.

Winning the GenAI race

If any of these hard truths apply to you, don’t worry. Generative AI is in such nascent stages that there’s still time to start back over, and this time, embrace the challenge.

Take a step back to understand the customer needs an AI model can solve, bring data engineers into earlier development stages to secure a competitive edge from the start, and take the time to build a RAG pipeline that can supply a steady stream of high-quality, reliable data.

And, invest in a modern data stack. Tools like data observability will be a core component of data quality best practices, and generative AI without high-quality data is just a whole lotta’ fluff.

The post 5 Hard Truths About Generative AI for Technology Leaders appeared first on Datafloq.