How to Evaluate the Best Data Observability Tools

Data observability has been one of the hottest emerging data engineering technologies of the last several years.

This momentum shows no signs of stopping, with data quality and reliability becoming a central topic in the data product and AI conversations taking place across organizations of all types and sizes.

Benefits of data observability include:

- Increasing data trust and adoption

- Mitigating operational, reputational, and compliance risks associated with bad data

- Boosting revenue

- Reducing the time and resources associated with data quality (more efficient DataOps)

Following Monte Carlo’s creation of the data observability category in 2019, a number of data observability tools have entered the market at various levels of maturity.

In this post, we will share analyst reports and the core evaluation criteria we see organizations use when ranking data observability solutions.

Finally, we’ll share our perspective on a number of data observability vendors, from relative newcomers to open-source stop-gaps.

What are data observability tools?

Data observability refers to an organization’s comprehensive understanding of the health and reliability of its data and data systems. In other words, data observability tools help data teams be the first to know when data breaks and how to fix it.

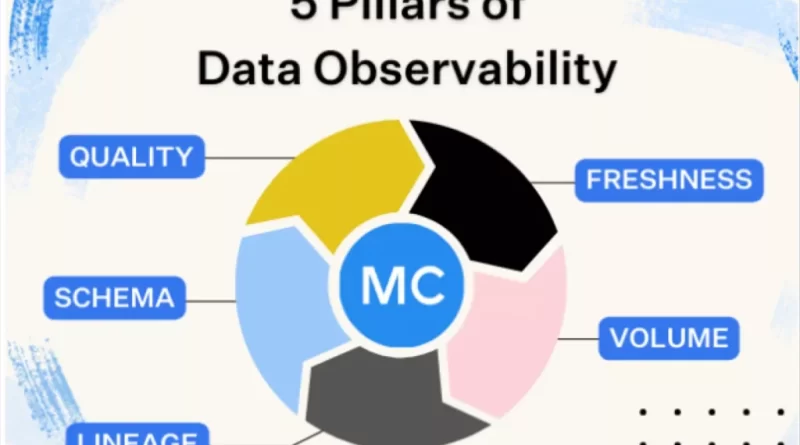

The goal of data observability tools is to reduce data downtime by automating or accelerating the detection, management, and resolution of data quality incidents. The core features of data observability tools were originally defined across five pillars that included four types of machine learning anomaly detection monitors and data lineage:

- Freshness: Did the data arrive when it was expected?

- Volume: Did we receive too many or too few rows?

- Schema: Did the structure of the data change in a way that will break data assets downstream?

- Quality: Are the values of the data itself within a normal range? Has there been a spike in NULLs or a drop in the percentage of unique values?

- Lineage: How does the data flow through your tables and systems across your modern data stack? This is critical to both prioritizing incident response and finding the root cause.

If a solution doesn’t have features covering these five pillars, then it cannot be considered a data observability tool. However, while the five pillars are essential to the data observability category, they aren’t the only dimensions by which these tools should be evaluated.
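To make the first four pillars concrete, here is a minimal Python sketch of what each check asks. It is an illustration only, not how any particular vendor implements its monitors: the function names, the z-score threshold, and the sample values are all assumptions, and a simple statistical rule stands in for the machine learning anomaly detection that commercial tools use.

```python
from datetime import datetime, timedelta
from statistics import mean, stdev


def is_stale(last_loaded_at, now, expected_interval):
    """Freshness: has more time passed since the last load than expected?"""
    return now - last_loaded_at > expected_interval


def volume_anomaly(history, today, z_threshold=3.0):
    """Volume: is today's row count far from the recent average?

    Uses a plain z-score as a stand-in for a learned model (assumption).
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


def schema_changes(previous, current):
    """Schema: which columns were added, removed, or changed type?

    Both arguments map column name -> type name.
    """
    shared = set(previous) & set(current)
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        "retyped": sorted(c for c in shared if previous[c] != current[c]),
    }


def null_rate(values):
    """Quality: what fraction of the values are NULL (None)?"""
    return sum(v is None for v in values) / len(values) if values else 0.0


# Hypothetical sample data for illustration.
row_counts = [1000, 1020, 980, 1010, 990]
print(volume_anomaly(row_counts, 400))   # a sharp drop in rows
print(schema_changes({"id": "int", "amount": "float"},
                     {"id": "int", "amount": "string", "region": "string"}))
```

The point of the sketch is that each pillar reduces to a question you can ask of metadata or of the values themselves. What a dedicated tool adds is doing this automatically across every table, with thresholds learned from history rather than hardcoded.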

Key features of data observability tools: The analyst perspective

Let’s take a look at what some leading industry analysts have pointed to as key evaluation criteria for data observability tools.

Gartner

While Gartner hasn’t produced a data observability Magic Quadrant or a report ranking data observability vendors, they’ve named it one of the hottest emerging technologies and placed it on the 2023 Data Management Hype Cycle.

They say data and analytics leaders should, “Explore the data observability tools available in the market by investigating their features, upfront setup, deployment models and possible constraints. Also consider how it fits to overall data ecosystems and how it interoperates with the existing tools.”

We anticipate Gartner will continue to evolve and add to their guidance on data observability tools this year.

GigaOm

GigaOm’s Data Observability Radar Report covers the problem data observability tools look to solve, saying, “Data observability is critical for countering, if not eliminating, data downtime, in which the results of analytics or the performance of applications are compromised due to unhealthy, inaccurate data.”

The authors include a list of key criteria and a list of evaluation metrics.

Key criteria include:

- Schema change monitoring

- Data pipeline support

- AIOps

- Advanced data quality

- Edge capabilities

Evaluation metrics:

- Contextualization

- Ease of connectability or configurability

- Security and compliance

- BI-like experience

- Reusability

The analyst’s take at the conclusion of the report also highlights the importance of end-to-end coverage and root cause analysis, two features we believe are essential factors for evaluating data observability tools as well.

Ventana

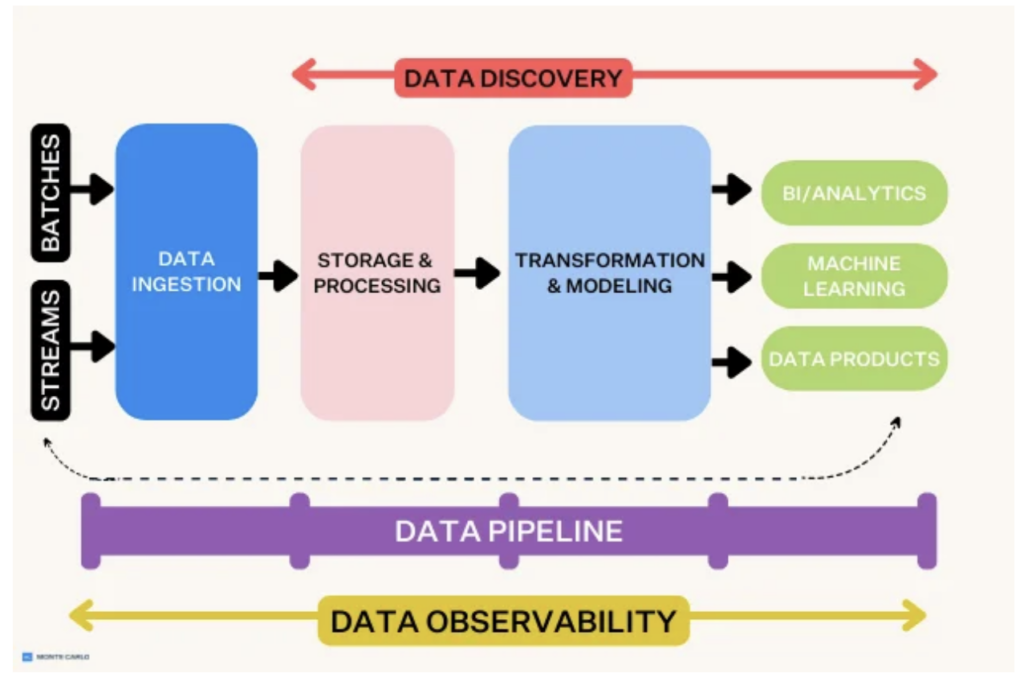

The Ventana Research Buyers Guide does a good job capturing the essence of these tools, saying, “data observability tools monitor not just the data in an individual environment for a specific purpose at a given point in time, but also the associated upstream and downstream data pipelines.”

They also used standard dimensions of SaaS platforms in how they ranked vendors:

- Adaptability

- Capability

- Manageability

- Reliability

- Usability

- Customer Experience

- TCO/ROI

- Validation

However, product capability is the most heavily weighted, at 25% of the evaluation. Here Ventana really hit the nail on the head, saying that the best data observability solutions go beyond detection to focus on resolution, prevention, and other workflows:

“The research largely focuses on how vendors apply data observability and the specific processes where some specialize, such as the detection of data reliability issues, compared to resolution and prevention. Vendors that have more breadth and depth and support the entire set of needs fared better than others. Vendors who specialize in the detection of data reliability issues did not perform as well as the others.”

G2 Crowd

G2 was one of the earliest non-vendor resources to put together a credible list of data observability vendors and a definition for the category. They say:

To qualify for inclusion in the G2 Crowd data observability category, a product must:

- Proactively monitor, alert, track, log, compare, and analyze data for any errors or issues across the entire data stack

- Monitor data at rest and data in motion, and does not require data extraction from current storage location

- Connect to an existing stack without any need to write code or modify data pipelines

Vendors are evaluated by verified users of the product across a list of organizational and product-specific capabilities, including:

- Quality of support

- Ease of admin

- Ease of use

- Integrations

- Alerting

- Monitoring

- Product direction

- Automation

- Single pane view

Key features of data observability tools: Our perspective

Our customers’ needs are never far from our mind when we think about data observability as a category and our own feature roadmap. In addition to the five pillars, we believe the following are key value propositions for a data observability solution:

- Enterprise readiness

- End-to-end coverage

- Seamless incident management

- Integrated data lineage

- Comprehensive root cause analysis

- Quick time-to-value

- AI ready

Let’s take a look at each.

Enterprise readiness

Data is like fashion: it’s ever evolving. You don’t need another vendor; you need a data observability provider that can serve as a strategic advisor, someone who is going to innovate alongside you for the long haul and ensure your operationalization is informed by best practices.

Vendors will promise the world, but can they deliver if they are 12 people in a garage? Will they be around next year?

These are important questions to answer through customer reference calls and an understanding of their overall maturity. As we saw above, these dimensions are also well covered across analyst reviews.

Some key areas to evaluate for enterprise readiness include:

- Security: Do they have SOC 2 certification? Robust role-based access controls?

- Architecture: Do they have multiple deployment options for the level of control over the connection? How does it impact data warehouse/lakehouse performance?

- Usability: This can be subjective and superficial during a committee POC, so it’s important to balance this with the perspective of actual users. Otherwise you might over-prioritize how pretty an alert looks versus aspects that will save you time, such as the ability to bulk update incidents or deploy monitors-as-code.

- Scalability: This is important for small organizations and essential for larger ones. We all know the nature of data and data-driven organizations lends itself to fast, and at times unexpected, growth. What are the vendor’s largest deployments? Has this organization proven its ability to grow alongside its customer base? Other key features here include the ability to support domains, reporting, change logging, and more. These typically aren’t flashy features, so many vendors don’t prioritize them.

- Support: Data observability isn’t just a technology, it’s an operational process. The maturity of the vendor’s customer success organization can impact your level of success, as can support SLAs (the vendor doesn’t even have support SLAs? Red flag!).

- Innovation history and roadmap: The data world changes rapidly, and as we enter the AI era, you need a partner that has a history of being at the forefront of these trends. Fast followers are often anything but, with comparable features shipped 6 months to a year later. That’s 25 in chief data officer years! Cloud-native solutions often have a leg up here.

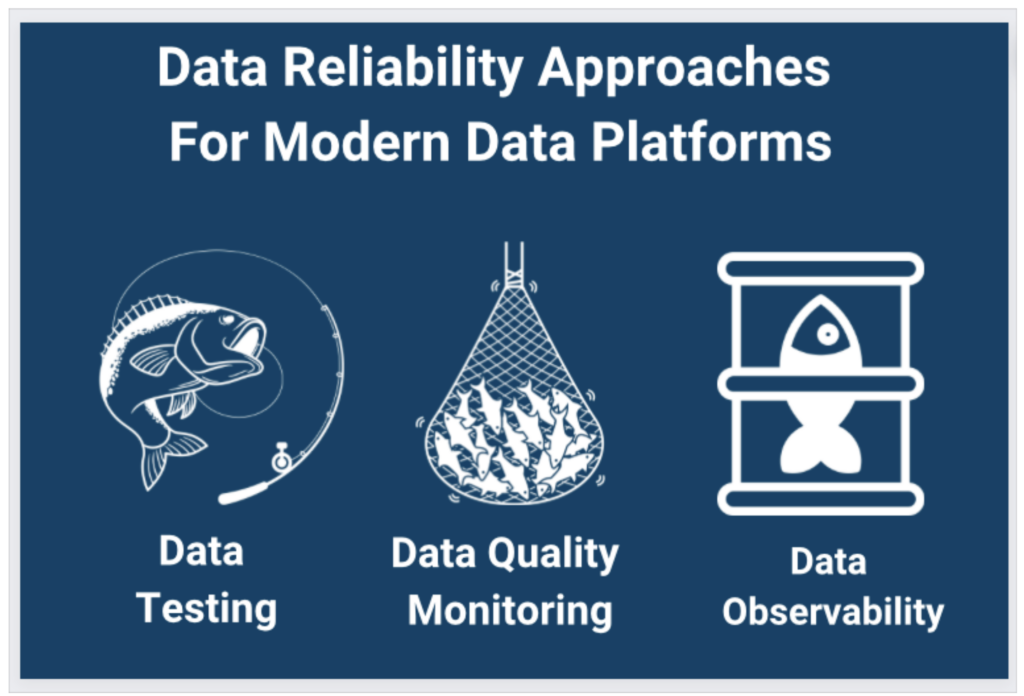

End-to-end coverage

The true power of data observability tools lies in their ability to integrate across modern data platform layers to create end-to-end visibility into your critical pipelines.

Don’t fish with a line, shoot fish in a barrel. (Yes, we have reasoning behind this convoluted analogy.)

For years, data testing, whether it was hardcoded, dbt tests, or some other type of unit test, was the primary mechanism to catch bad data.

While still relevant in the right context, the problem with data testing as a complete practice is that you couldn’t possibly write a test for every single way your data could break. No matter how well you know your pipelines, unknown unknowns will still be a fact of life. And even if you could identify every potential break (which you can’t), you certainly wouldn’t be able to scale your testing to account for each one as your environment grew. That leaves a lot of cracks in your pipelines to fill.

Data observability tools should offer both broad automated metadata monitoring across all the tables once they have been added to your selected schemas, as well as deep monitoring for issues inherent in the data itself.

A strong data observability tool will also integrate widely and robustly across your modern data platform, from ingestion to BI and consumption, and enable quick time-to-value through simple plug-and-play integrations.

Be sure to verify that your chosen solution offers tooling integrations for each of the layers you’ll need to monitor in order to validate the quality of your data products, as well as integrations into existing workflows with tools like Slack, Microsoft Teams, Jira, and GitHub. Speaking of which...

Seamless incident management

Most data teams we talk to initially have a detection-focused mindset as it relates to data quality, likely formed from their experience with data testing.

The beauty of data observability is that not only can you catch more meaningful incidents, but the best solutions will also include features that improve and accelerate your ability to manage incidents. Bad data is inevitable, and having tools to mitigate its impact provides tremendous value.

There are a few areas to evaluate when it comes to incident management:

- Impact analysis: How do you know if an incident is critical and requires prioritizing? Easy: you look at the impact. Data observability tools that provide automated column-level lineage out of the box will also sometimes provide an impact radius dashboard to illustrate how far a quality issue has extended from its root. This can help data engineers understand at a glance how many teams or products have been impacted by a particular issue and who needs to be kept informed as it moves through triage and resolution.

- Internal team collaboration: Once an alert has triggered, there needs to be a process for assigning and potentially transferring ownership of the incident. This may involve integrating with external ticket management solutions like Jira or ServiceNow, or some teams may choose to manage the incident lifecycle within the data observability tool itself. Either way, it’s helpful to have the flexibility to do both.

- Proactive communication with data consumers: When consumers use bad data to make decisions, you lose data trust. Data observability solutions should have a means of proactively communicating the current health of particular datasets or data products to data consumers.
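The impact analysis idea above can be sketched as a graph traversal. The example below is a rough illustration, assuming lineage is available as a simple mapping from each asset to its direct downstream dependents; the asset names and the `impact_radius` function are hypothetical and do not reflect any vendor’s API.

```python
from collections import deque


def impact_radius(lineage, root):
    """Breadth-first walk from the broken asset to everything downstream.

    lineage maps an asset name to the list of assets that read from it.
    Returns {downstream_asset: number_of_hops_from_root}.
    """
    distances = {}
    seen = {root}
    queue = deque([(root, 0)])
    while queue:
        node, depth = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                distances[child] = depth + 1
                queue.append((child, depth + 1))
    return distances


# Hypothetical lineage graph for illustration.
lineage = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["mart.revenue", "mart.customers"],
    "mart.revenue": ["dash.exec_kpis"],
}

for asset, hops in impact_radius(lineage, "stg.orders").items():
    print(f"{asset} is {hops} hop(s) downstream")
```

Joining the returned assets to an ownership table (not shown) would give the list of teams to notify, which is the practical payoff of an impact radius view.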

Comprehensive root cause analysis

What is your standard root cause analysis process? Does it feel disjointed, hopping across multiple tools? How long does it take to resolve an issue?

Data can go bad in a lot of ways. A comprehensive data observability tool should help you identify whether the root cause is an issue with the data, system, or code.

For example, the data can be bad from the source. If an application went buggy and you started seeing an abnormally low sales value from orders in New York, that would be considered a data issue.

Alternatively, a data environment is made up of a panoply of irreducibly complex systems that all need to work in tandem to deliver valuable data products to your downstream consumers. Sometimes the issue is hidden within this web of dependencies. If you had an Airflow job that caused your data to fail, the real culprit wouldn’t be the data but a system issue.

Or if a bad dbt model or data warehouse query change ultimately broke the data product downstream, that would be considered a code issue.

A thorough data observability tool would be able to accurately identify these issues and provide the right context to help your team remediate each at its source.

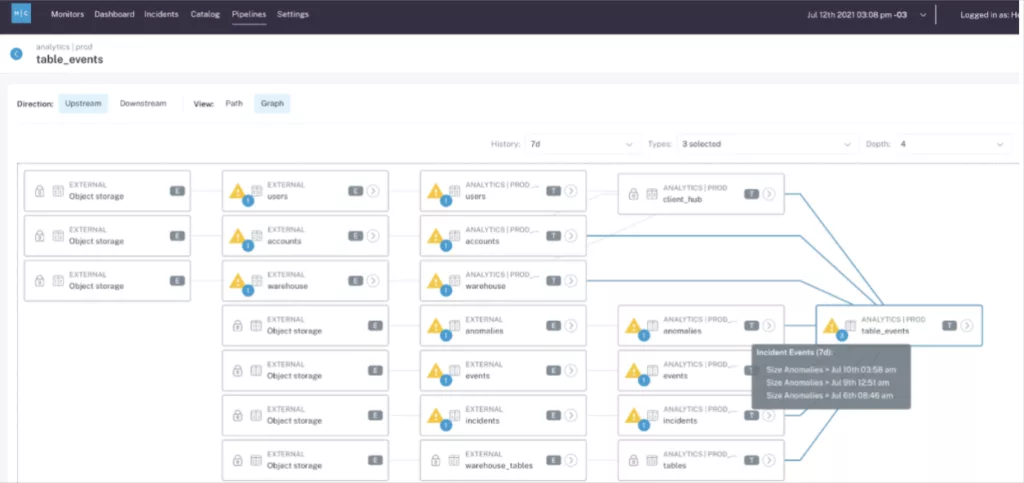

Integrated column-level data lineage

Lineage is a dependency map that allows you to visualize the flow of data through your pipelines and simplify root cause analysis and remediation.

While a number of tools like dbt will provide lineage mapping at the table level, very few extend that lineage into the columns of a table or show how that data flows across all of your systems. Sometimes called “field-level lineage,” column-level lineage maps the dependencies between data sets and tables across data products to show visually how data moves through your pipelines.

In this scenario, a data observability solution with lineage might send 1 alert while a data quality solution without lineage might send 13.

It’s also important that your data lineage and data incident detection features work as an integrated solution within the same platform. A key reason for this is that lineage-grouped alerting not only reduces alert fatigue, but also helps tell a more cohesive story when an event impacts multiple tables.

Rather than getting 12 jumbled chapters that may be part of one or two stories, you are getting an alert with the full story and table of contents.
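A rough sketch of how lineage-grouped alerting can collapse many alerts into one is shown below. It assumes table-level lineage is available as a mapping from each table to its direct downstream tables and that the graph has no cycles; the `group_alerts` function and table names are hypothetical, and real products likely use more signals (timing, column-level lineage) than this simple rule.

```python
def group_alerts(lineage, anomalous):
    """Group anomalous tables under their most upstream anomalous ancestor.

    lineage maps a table to its direct downstream tables (assumed acyclic).
    Returns {root_table: [anomalous tables downstream of that root]}.
    """
    anomalous = set(anomalous)

    def downstream(start):
        # Collect every table reachable from start.
        seen, stack = set(), [start]
        while stack:
            for child in lineage.get(stack.pop(), []):
                if child not in seen:
                    seen.add(child)
                    stack.append(child)
        return seen

    reach = {table: downstream(table) for table in anomalous}
    # A root is an anomalous table that no other anomalous table feeds into.
    roots = [
        t for t in anomalous
        if not any(t in reach[other] for other in anomalous if other != t)
    ]
    return {root: sorted(reach[root] & anomalous) for root in sorted(roots)}


# Hypothetical example: four tables look anomalous at once.
lineage = {
    "raw.orders": ["stg.orders"],
    "stg.orders": ["mart.revenue", "mart.customers"],
    "mart.revenue": ["dash.kpis"],
}
flagged = ["stg.orders", "mart.revenue", "mart.customers", "dash.kpis"]

for root, affected in group_alerts(lineage, flagged).items():
    print(f"1 alert for {root}, also affecting: {', '.join(affected)}")
```

In this toy case, four separate anomalies become a single alert rooted at the first broken table, with the downstream tables listed as context rather than paged individually.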

Quick time-to-value

Data observability is meant to reduce work, not add more.

If a data observability tool provides the right integrations and automated monitors for your environment out of the box, it will be quick to implement and deliver near-immediate time-to-value for data teams.

A data observability solution that requires more than an hour to set up and more than a couple of days to start delivering value is unlikely to deliver the data quality efficiencies that a growing data organization will require to scale data quality long-term.

AI ready

Building differentiated, useful generative AI applications requires first-party data. That means data engineers and high-quality data are integral to the solution.

Most data observability solutions today will monitor the data pipelines powering RAG or fine-tuning use cases, since they are essentially the same as data pipelines powering other data products such as dashboards, ML applications, or customer-facing data.

However, the generative AI ecosystem is evolving rapidly, and your data observability vendor needs to be not just monitoring this evolution but helping to lead the charge. That means features like observability for vector databases and streaming data sources, and ensuring pipelines are as performant as possible.

What’s the future of data observability tools?

There’s one critical factor that we didn’t mention earlier that plays a huge role in the long-term viability of a data observability solution: category leadership.

As with any piece of enterprise software, you’re not just making a decision for the here and now; you’re betting on the future as well.

When you choose a data observability solution, you are making a statement about the vision of that company and how closely it aligns with your own long-term goals. “Will this partner make the right decisions to continue to provide adequate data quality coverage as the data landscape changes and my own needs expand?”

Particularly as AI proliferates, having a solution that will innovate when and how you need it is just as important as what that platform offers today.

Not only has Monte Carlo been named a proven category leader by the likes of G2, Gartner, Ventana, and the industry at large, but with a commitment to support vector databases for RAG and to help organizations across industries power the future of market-ready enterprise AI, Monte Carlo has become the de facto leader for AI reliability as well.

There’s no question that AI is a data product. And with a mission to power data quality for your most critical data products, Monte Carlo is committed to helping you deliver the most reliable and valuable AI products to your stakeholders.

This article was originally published here.

The post How to Evaluate the Best Data Observability Tools appeared first on Datafloq.