How RLHF is Transforming LLM Response Accuracy and Effectiveness

Large language models (LLMs) have advanced beyond simple autocompletion, that is, predicting the next word or phrase. Recent developments allow LLMs to understand and follow human instructions, perform complex tasks, and even engage in conversations. These developments are driven by fine-tuning LLMs with specialized datasets and by reinforcement learning from human feedback (RLHF). RLHF is redefining how machines learn from and interact with human inputs.

What is RLHF?

RLHF is a technique that uses human feedback to train a large language model so that its outputs align with human preferences and expectations. Humans evaluate the model’s responses and provide ratings, which the model uses to improve its performance. This iterative process helps LLMs refine their understanding of human instructions and generate more accurate and relevant output. RLHF has played a crucial role in improving the performance of InstructGPT, Sparrow, Claude, and other models, enabling them to outperform traditional LLMs such as GPT-3.

Let’s look at how RLHF works.

RLHF vs Non-RLHF

Large language models were initially designed to predict the next word or token to complete a sentence, based on an input known as a ‘prompt’. For example, to complete a statement, you might prompt GPT-3 with the following input:

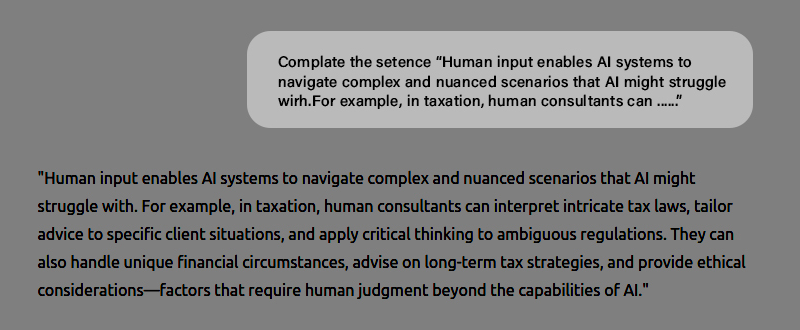

Prompt: Complete the sentence “Human input enables AI systems to navigate complex and nuanced scenarios that AI might struggle with. For example, in taxation, human experts can …….”

The model then successfully completes the statement as follows:

“Human input enables AI systems to navigate complex and nuanced scenarios that AI might struggle with. For example, in taxation, human experts can interpret intricate tax laws, tailor advice to specific client situations, and apply critical thinking to ambiguous regulations.”

Asking an LLM to continue a prompt
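To make the idea of next-token prediction concrete, here is a minimal Python sketch of a toy “predictor” that counts which word follows which in a tiny, hypothetical corpus and then greedily appends the most frequent next word. Real LLMs use neural networks over subword tokens rather than word counts, and `train_bigram` and `complete` are illustrative names, not part of any library.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word in the corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def complete(prompt: str, counts: dict[str, Counter], max_new_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])  # .get does not create empty entries
        if not followers:
            break  # no known continuation for the last word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training text.
corpus = [
    "human experts can interpret tax laws",
    "human experts can tailor advice",
]
counts = train_bigram(corpus)
print(complete("human experts can", counts))  # prints: human experts can interpret tax laws
```

Because such a model only continues text, it has no notion of an instruction: a prompt like “Write a story” is simply treated as more words to extend.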

However, large language models are expected to do more than complete a prompt. LLMs are required to write stories, emails, poems, code, and more.

RLHF vs Non-RLHF Examples

Here are a few examples showing the difference between the output of a non-RLHF LLM (a next-token predictor) and an RLHF LLM (trained on human feedback).

Non-RLHF Output – Story

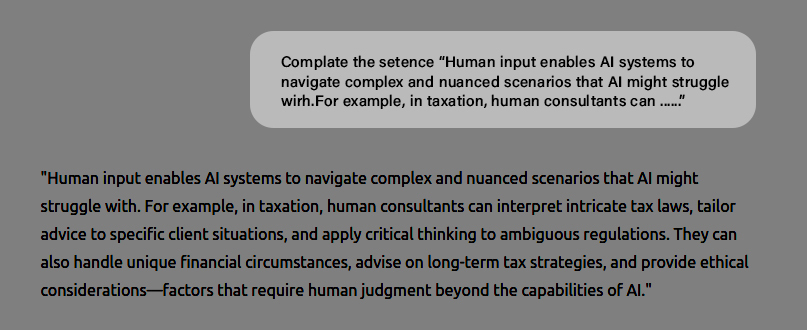

When you tell GPT-3 to ‘write a fictional story about Princess Anastasia falling in love with a soldier’, a non-RLHF model generates output like:

Prompt: Write a fictional story about Princess Anastasia falling in love with a soldier.

The model knows how to write stories, but it can’t understand the request, because LLMs are trained on internet scrapes, which rarely contain a command like ‘write a story/email’ followed by the story or email itself. Predicting the next word is fundamentally different from intelligently following instructions.

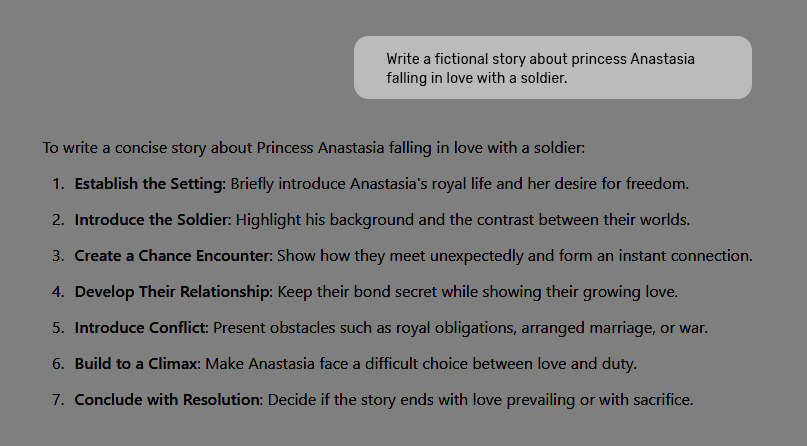

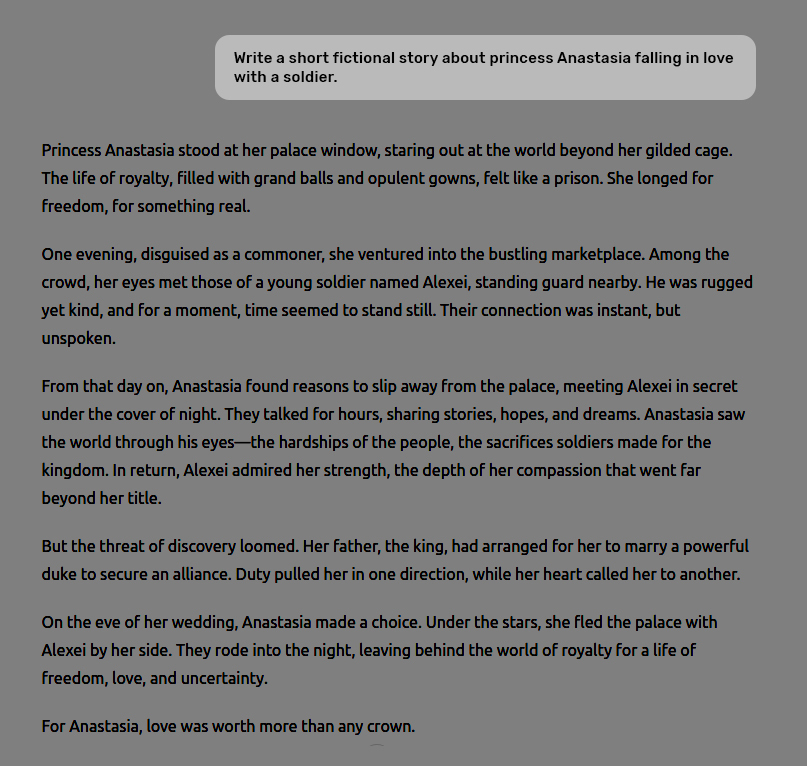

RLHF Output – Story

Here is what you get when the same prompt is given to an RLHF model trained on human feedback.

Prompt: Write a fictional story about Princess Anastasia falling in love with a soldier.

Now, the LLM generates the desired answer.

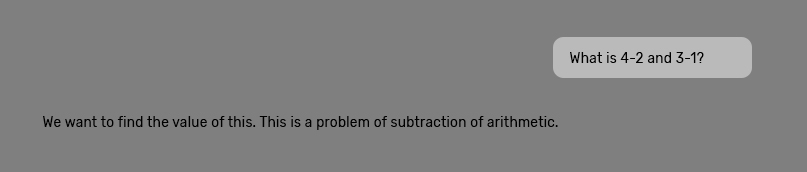

Non-RLHF Output – Mathematics

Prompt: What is 4-2 and 3-1?

The non-RLHF model doesn’t answer the question and instead treats it as part of a story’s dialogue.

RLHF Output – Mathematics

Prompt: What is 4-2 and 3-1?

The RLHF model understands the prompt and answers it correctly.

How does RLHF Work?

Let’s look at how a large language model is trained on human feedback to respond appropriately.

Step 1: Starting with Pre-trained Models

The RLHF process begins with a pre-trained language model, that is, a next-token predictor.
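A next-token predictor is typically pre-trained by minimizing cross-entropy: the average negative log-probability the model assigned to each token that actually came next. The sketch below computes that quantity from hypothetical per-position probabilities; in a real model those probabilities come from the network’s output layer, and `next_token_loss` is an illustrative name.

```python
import math


def next_token_loss(probs_of_actual_next: list[float]) -> float:
    """Average negative log-likelihood (cross-entropy) of the observed next tokens.

    Each entry is the probability the model assigned to the token that
    actually came next at that position in the training text.
    """
    return -sum(math.log(p) for p in probs_of_actual_next) / len(probs_of_actual_next)


# Hypothetical per-position probabilities for a 4-token continuation.
confident_model = [0.9, 0.8, 0.95, 0.7]
unsure_model = [0.2, 0.1, 0.3, 0.25]
print(f"confident: {next_token_loss(confident_model):.3f}")
print(f"unsure:    {next_token_loss(unsure_model):.3f}")
```

Lower is better: pre-training pushes the model to give high probability to whatever token really comes next, which makes it good at completion but not, by itself, at following instructions.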

Step 2: Supervised Model Fine-tuning

First, a set of input prompts covering the tasks you want the model to perform is created, along with a human-written ideal response to each prompt. In other words, a training dataset of <prompt, corresponding ideal output> pairs is built and used to fine-tune the pre-trained model so that it generates similar high-quality responses.
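The <prompt, corresponding ideal output> pairs could be prepared for fine-tuning roughly as follows. This is a minimal sketch under several assumptions: whitespace splitting stands in for a real tokenizer, and prompt positions are labeled -100 (mirroring the default `ignore_index` of PyTorch’s `CrossEntropyLoss`) so that only the ideal response contributes to the loss, a common but not universal choice. `SFTExample`, `build_vocab`, and `to_training_pair` are hypothetical names.

```python
from dataclasses import dataclass

IGNORE_INDEX = -100  # label value meaning "do not compute loss at this position"


@dataclass
class SFTExample:
    prompt: str
    ideal_output: str


def build_vocab(examples: list[SFTExample]) -> dict[str, int]:
    """Assign an integer id to every whitespace-separated word (toy tokenizer)."""
    vocab: dict[str, int] = {}
    for ex in examples:
        for word in f"{ex.prompt} {ex.ideal_output}".split():
            vocab.setdefault(word, len(vocab))
    return vocab


def to_training_pair(ex: SFTExample, vocab: dict[str, int]) -> tuple[list[int], list[int]]:
    """Concatenate prompt and ideal output; only output positions keep real labels."""
    prompt_ids = [vocab[w] for w in ex.prompt.split()]
    output_ids = [vocab[w] for w in ex.ideal_output.split()]
    input_ids = prompt_ids + output_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + output_ids
    return input_ids, labels


dataset = [
    SFTExample("What is 4-2 and 3-1?", "4-2 is 2 and 3-1 is 2."),
    SFTExample(
        "Write a fictional story about Princess Anastasia falling in love with a soldier.",
        "Once upon a time, Princess Anastasia met a young soldier.",
    ),
]
vocab = build_vocab(dataset)
input_ids, labels = to_training_pair(dataset[0], vocab)
print(input_ids)
print(labels)
```

In this sketch the labels are aligned with the input positions; the one-position shift needed for next-token prediction is assumed to happen inside the loss computation.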

Step 3: Creating a Human Feedback Reward Model

This step involves creating a reward model to evaluate how well the LLM’s output meets quality expectations. The reward model is trained on a dataset of human-rated responses, which serve as the ‘ground truth’ for assessing response quality. It is essentially a smaller version of the LLM, with certain layers removed so that it is optimized for scoring text rather than generating it. The reward model takes the prompt and the LLM-generated response as input and assigns a numerical score (a scalar reward) to the response.
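The “scalar reward” idea can be sketched in a few lines. Here `hidden_states` stands in for the per-token vectors that the model’s remaining layers would produce for the prompt plus response; the sketch averages them (one pooling choice among several) and projects the result to a single number with a linear layer. All values, and the name `reward_head`, are hypothetical.

```python
def reward_head(hidden_states: list[list[float]], weights: list[float], bias: float) -> float:
    """Average the per-token vectors, then project them to one scalar reward."""
    num_tokens = len(hidden_states)
    pooled = [sum(vec[d] for vec in hidden_states) / num_tokens for d in range(len(weights))]
    return sum(w * x for w, x in zip(weights, pooled)) + bias


# Hypothetical 3-dimensional vectors for a 2-token (prompt + response) input.
hidden_states = [[0.2, -0.1, 0.5], [0.4, 0.3, -0.2]]
weights = [1.0, -0.5, 2.0]  # hypothetical learned parameters of the scoring layer
print(round(reward_head(hidden_states, weights, bias=0.1), 4))  # prints 0.65
```

Whatever the input length, the output is one number, which is what lets the reward model compare and rank responses.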

To build this dataset, human annotators evaluate LLM-generated outputs and rank them by quality, based on relevance, accuracy, and clarity.
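Rankings are usually turned into a training signal by comparing responses two at a time. A common choice, used for example in OpenAI’s InstructGPT work, is the pairwise loss -log(sigmoid(r_preferred - r_rejected)), which is small when the reward model scores the human-preferred response higher. The sketch below assumes a best-to-worst ranking and hypothetical reward scores; the function names are illustrative.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked: list[str]) -> list[tuple[str, str]]:
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations keeps input order, so the first item of each pair is the better one
    return list(combinations(ranked, 2))


def pairwise_loss(reward_preferred: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_preferred - r_rejected)), written as log(1 + exp(-diff))."""
    diff = reward_preferred - reward_rejected
    return math.log1p(math.exp(-diff))


# Hypothetical annotator ranking (best first) and current reward-model scores.
ranking = ["response_a", "response_b", "response_c"]
scores = {"response_a": 1.2, "response_b": 0.4, "response_c": -0.3}

pairs = ranking_to_pairs(ranking)
loss = sum(pairwise_loss(scores[p], scores[r]) for p, r in pairs) / len(pairs)
print(pairs)
print(f"mean pairwise loss: {loss:.3f}")
```

Training lowers this loss by raising the preferred response’s score relative to the rejected one; a ranking of K responses yields K(K-1)/2 such comparisons.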

Step 4: Optimizing with a Reward-driven Reinforcement Learning Policy

The final step in the RLHF process is to train an RL policy (essentially an algorithm that decides which word or token to generate next in the text sequence) that learns to generate text the reward model predicts humans would prefer.

In other words, the RL policy learns to respond the way humans would prefer by maximizing the score it receives from the reward model.
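A policy that learns to maximize the reward model’s score can be illustrated with a deliberately tiny example. Systems such as InstructGPT used PPO with a penalty that keeps the policy close to the supervised model; this sketch swaps in the simpler REINFORCE policy-gradient method, and the “policy” only chooses among three fixed candidate answers to the 4-2 and 3-1 prompt, scored by hypothetical reward-model values.

```python
import math
import random


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce(rewards: list[float], steps: int = 1000, lr: float = 0.1, seed: int = 0) -> list[float]:
    """Train a softmax policy over fixed candidates to maximize expected reward (REINFORCE)."""
    rng = random.Random(seed)
    logits = [0.0] * len(rewards)  # start from a uniform policy
    baseline = 0.0  # running average reward, used to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(rewards)), weights=probs)[0]  # sample one response
        advantage = rewards[i] - baseline
        baseline += 0.05 * (rewards[i] - baseline)
        # the gradient of log pi(i) with respect to logit j is 1[j == i] - probs[j]
        for j in range(len(logits)):
            indicator = 1.0 if j == i else 0.0
            logits[j] += lr * advantage * (indicator - probs[j])
    return softmax(logits)


candidates = [
    "4-2 is 2 and 3-1 is 2.",
    "Once upon a time, a girl asked what 4-2 was...",
    "I am not sure.",
]
rewards = [1.0, -0.5, 0.0]  # hypothetical reward-model scores
final_probs = reinforce(rewards)
for text, p in zip(candidates, final_probs):
    print(f"{p:.2f}  {text}")
```

After training, most of the probability moves to the answer the reward model scores highest; a real policy does the same over every token it generates rather than over three canned replies.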

This is how a sophisticated large language model like ChatGPT is created and fine-tuned.

Final Words

Large language models have made considerable progress over the past few years and continue to do so. Techniques like RLHF have led to innovative models such as ChatGPT and Gemini, transforming AI responses across a wide range of tasks. Notably, by incorporating human feedback into the fine-tuning process, LLMs are not only better at following instructions but also more aligned with human values and preferences, which helps them better understand the boundaries and purposes for which they are designed.

RLHF is transforming large language models (LLMs) by improving the accuracy of their output and their ability to follow human instructions. Unlike traditional LLMs, which were originally designed to predict the next word or token, RLHF-trained models use human feedback to fine-tune their responses, aligning them with user preferences.

The post How RLHF is Transforming LLM Response Accuracy and Effectiveness appeared first on Datafloq.