How to perform Web Scraping using Selenium and Python

What is Web Scraping and Why is it used?

Data is a common want to clear up enterprise and analysis issues. Questionnaires, surveys, interviews, and types are all knowledge assortment strategies; nevertheless, they do not fairly faucet into the largest knowledge useful resource obtainable. The Internet is a big reservoir of information on each believable topic. Unfortunately, most web sites don’t enable the choice to save and retain the info which might be seen on their internet pages. Web scraping solves this downside and permits customers to scrape massive volumes of the wanted knowledge.

Web scraping is the automated gathering of content material and knowledge from an internet site or another useful resource obtainable on the web. Unlike display screen scraping, internet scraping extracts the HTML code beneath the webpage. Users can then course of the HTML code of the webpage to extract knowledge and perform knowledge cleansing, manipulation, and evaluation.

Exhaustive quantities of this knowledge may even be saved in a database for large-scale knowledge evaluation tasks. The prominence and want for knowledge evaluation, together with the quantity of uncooked knowledge which might be generated using internet scrapers, has led to the event of tailored python packages which make internet scraping straightforward as pie.

Applications of Web Scraping

- Sentiment evaluation: While most web sites used for sentiment evaluation, resembling social media web sites, have APIs which permit customers to entry knowledge, this isn’t at all times sufficient. In order to acquire knowledge in real-time concerning info, conversations, analysis, and traits it’s typically extra appropriate to internet scrape the info.

- Market Research: eCommerce sellers can monitor merchandise and pricing throughout a number of platforms to conduct market analysis concerning shopper sentiment and competitor pricing. This permits for very environment friendly monitoring of opponents and value comparisons to preserve a transparent view of the market.

- Technological Research: Driverless automobiles, face recognition, and advice engines all require knowledge. Web Scraping typically presents priceless info from dependable web sites and is without doubt one of the most handy and used knowledge assortment strategies for these functions.

- Machine Learning: While sentiment evaluation is a well-liked machine studying algorithm, it is just one in all many. One factor all machine studying algorithms have in widespread, nevertheless, is the massive quantity of information required to prepare them. Machine studying fuels analysis, technological development, and general progress throughout all fields of studying and innovation. In flip, internet scraping can gasoline knowledge assortment for these algorithms with nice accuracy and reliability.

Understanding the Role of Selenium and Python in Scraping

Python has libraries for nearly any goal a consumer can assume up, together with libraries for duties resembling internet scraping. Selenium includes a number of totally different open-source tasks used to perform browser automation. It helps bindings for a number of common programming languages, together with the language we will probably be using on this article: Python.

Initially, Selenium with Python was developed and used primarily for cross-browser testing; nevertheless, over time extra artistic use instances resembling selenium and python internet scrapping have been discovered.

Selenium makes use of the Webdriver protocol to automate processes on numerous common browsers resembling Firefox, Chrome, and Safari. This automation might be carried out domestically (for functions resembling testing an internet web page) or remotely (for internet scraping).

Example: Web Scraping the Title and all Instances of a Keyword from a Specified URL

The normal course of adopted when performing internet scraping is:

- Use the webdriver for the browser getting used to get a selected URL.

- Perform automation to acquire the knowledge required.

- Download the content material required from the webpage returned.

- Perform knowledge parsing and manipulation on the content material.

- Reformat, if wanted, and retailer the info for additional evaluation.

In this instance, consumer enter is taken for the URL of an article. Selenium is used together with BeautifulSoup to scrape and then perform knowledge manipulation to acquire the title of the article, and all situations of a consumer enter key phrase present in it. Following this, a depend is taken of the variety of instances discovered of the key phrase, and all this textual content knowledge is saved and saved in a textual content file known as article_scraping.txt.

How to perform Web Scraping using Selenium and Python

Pre-Requisites:

- Set up a Python Environment.

- Install Selenium v4. If you’ve got conda or anaconda arrange then using the pip bundle installer can be probably the most environment friendly technique for Selenium set up. Simply run this command (on anaconda immediate, or straight on the Linux terminal):

pip set up selenium

- Download the most recent WebDriver for the browser you would like to use, or set up webdriver_manager by working the command, additionally set up BeautifulSoup:

pip set up webdriver_manager

pip set up beautifulsoup4

Step 1: Import the required packages.

from selenium import webdriverfrom selenium.webdriver.chrome.service import Servicefrom selenium.webdriver.assist.ui import WebDriverWaitfrom selenium.webdriver.assist import expected_conditions as ECfrom bs4 import BeautifulSoupimport codecsimport refrom webdriver_manager.chrome import ChromeDriverManager

Selenium is required so as to perform internet scraping and automate the chrome browser we’ll be using. Selenium makes use of the webdriver protocol, subsequently the webdriver supervisor is imported to acquire the ChromeDriver appropriate with the model of the browser getting used. BeautifulSoup is required as an HTML parser, to parse the HTML content material we scrape. Re is imported so as to use regex to match our key phrase. Codecs are used to write to a textual content file.

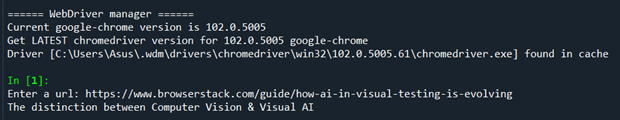

Step 2: Obtain the model of ChromeDriver appropriate with the browser getting used.

driver=webdriver.Chrome(service=Service(ChromeDriverManager().set up()))

Step 3: Take the consumer enter to acquire the URL of the web site to be scraped, and internet scrape the web page.

val = enter("Enter a url: ")wait = WebDriverWait(driver, 10)driver.get(val)

get_url = driver.current_url

wait.till(EC.url_to_be(val))

if get_url == val:

page_source = driver.page_source

The driver is used to get this URL and a wait command is utilized in order to let the web page load. Then a test is finished using the present URL technique to be sure that the proper URL is being accessed.

Step 4: Use BeautifulSoup to parse the HTML content material obtained.

soup = BeautifulSoup(page_source,options="html.parser")

key phrase=enter("Enter a key phrase to discover situations of within the article:")

matches = soup.physique.find_all(string=re.compile(key phrase))len_match = len(matches)title = soup.title.textual content

The HTML content material internet scraped with Selenium is parsed and made right into a soup object. Following this, consumer enter is taken for a key phrase for which we’ll search the article’s physique. The key phrase for this instance is “knowledge“. The physique tags within the soup object are looked for all situations of the phrase “knowledge” using regex. Lastly, the textual content within the title tag discovered inside the soup object is extracted.

Step 4: Store the info collected right into a textual content file.

file=codecs.open('article_scraping.txt', 'a+')file.write(title+"n")file.write("The following are all situations of your key phrase:n")depend=1for i in matches:file.write(str(depend) + "." + i + "n")depend+=1file.write("There have been "+str(len_match)+" matches discovered for the key phrase."file.shut()driver.stop()

Use codecs to open a textual content file titled article_scraping.txt and write the title of the article into the file, following this quantity, and append all situations of the key phrase inside the article. Lastly, append the variety of matches discovered for the key phrase within the article. Close the file and stop the motive force.

Output:

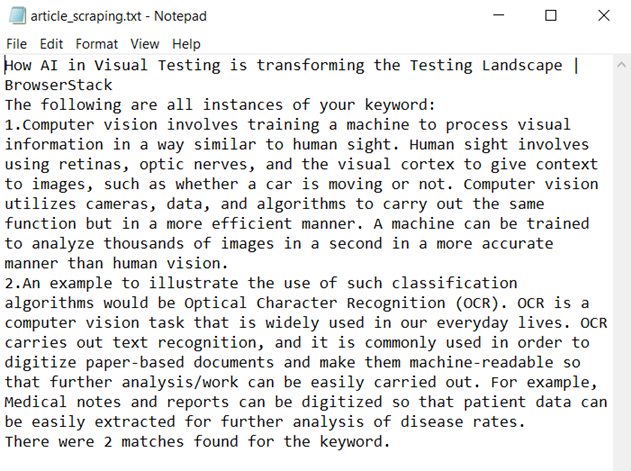

Text File Output:

The title of the article, the 2 situations of the key phrase, and the variety of matches discovered might be visualized on this textual content file.

How to use tags to effectively accumulate knowledge from web-scraped HTML pages:

print([tag.name for tag in soup.find_all()])print([tag.text for tag in soup.find_all()])

The above code snippet can be utilized to print all of the tags discovered within the soup object and all textual content inside these tags. This might be useful to debug code or find any errors and points.

Other Features of Selenium with Python

You can use a few of Selenium’s inbuilt options to perform additional actions or maybe automate this course of for a number of internet pages. The following are a few of the most handy options provided by Selenium to perform environment friendly Browser Automation and Web Scraping with Python:

- Filling out types or finishing up searches

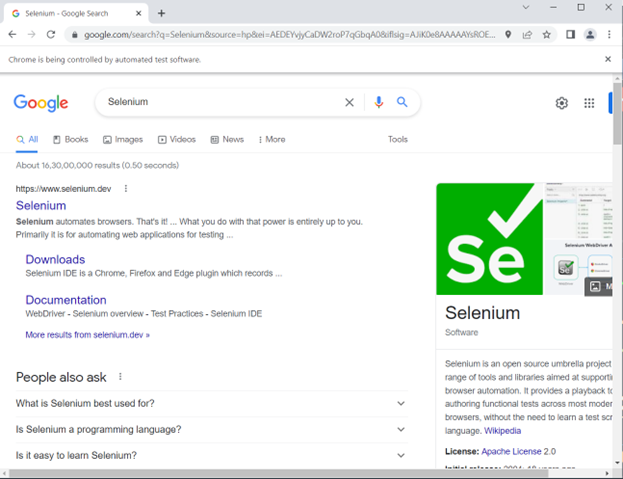

Example of Google search automation using Selenium with Python.

from selenium import webdriverfrom selenium.webdriver.chrome.service import Servicefrom webdriver_manager.chrome import ChromeDriverManagerfrom selenium.webdriver.widespread.keys import Keysfrom selenium.webdriver.widespread.by import By

driver = webdriver.Chrome(service=Service(ChromeDriverManager().set up()))

driver.get("https://www.google.com/")search = driver.find_element(by=By.NAME,worth="q")search.send_keys("Selenium")search.send_keys(Keys.ENTER)

First, the motive force hundreds google.com, which finds the search bar using the title locator. It varieties “Selenium” into the searchbar and then hits enter.

Output:

- Maximizing the window

driver.maximize_window()

- Taking Screenshots

driver.save_screenshot('article.png')

- Using locators to discover parts

Let’s say we do not need to get the complete web page supply and as a substitute solely need to internet scrape a choose few parts. This might be carried out by using Locators in Selenium.

These are a few of the locators appropriate to be used with Selenium:

- Name

- ID

- Class Name

- Tag Name

- CSS Selector

- XPath

Example of scraping using locators:

from selenium import webdriverfrom selenium.webdriver.chrome.service import Servicefrom selenium.webdriver.assist.ui import WebDriverWaitfrom selenium.webdriver.assist import expected_conditions as ECfrom selenium.webdriver.widespread.by import Byfrom webdriver_manager.chrome import ChromeDriverManager

driver = webdriver.Chrome(service=Service(ChromeDriverManager().set up()))

val = enter("Enter a url: ")

wait = WebDriverWait(driver, 10)driver.get(val)get_url = driver.current_urlwait.till(EC.url_to_be(val))if get_url == val:header=driver.find_element(By.ID, "toc0")print(header.textual content)

This instance’s enter is identical article because the one in our internet scraping instance. Once the webpage has loaded the aspect we wish is straight retrieved by way of ID, which might be discovered by using Inspect Element.

Output:

The title of the primary part is retrieved by using its locator “toc0” and printed.

- Scrolling

driver.execute_script("window.scrollTo(0, doc.physique.scrollHeight);")

This scrolls to the underside of the web page and is commonly useful for web sites which have infinite scrolling.

Conclusion

This information defined the method of Web Scraping, Parsing, and Storing the Data collected. It additionally explored Web Scraping particular parts using locators in Python with Selenium. Furthermore, it offered steering on how to automate an internet web page in order that the specified knowledge might be retrieved. The info offered ought to show to be of service to perform dependable knowledge assortment and perform insightful knowledge manipulation for additional downstream knowledge evaluation.

It is advisable to run Selenium Tests on an actual gadget cloud for extra correct outcomes because it considers actual consumer circumstances whereas working checks. With BrowserStack Automate, you’ll be able to entry 3000+ actual device-browser combos and take a look at your internet software totally for a seamless and constant consumer expertise.

The submit How to perform Web Scraping using Selenium and Python appeared first on Datafloq.